Hey Steem!

I’m Mark: I’m obsessed with cameras and computers and using technology to capture and manipulate light.

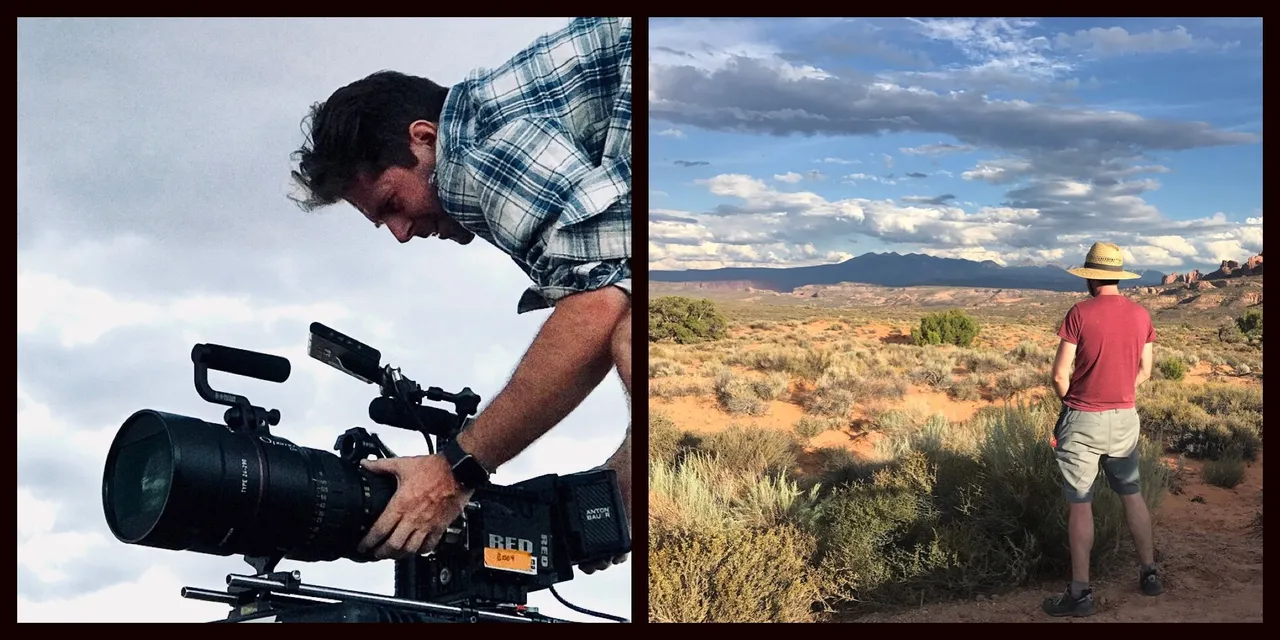

I'm from the PNW and spent a few years working in Seattle before making the leap to Los Angeles, where I do all kinds of freelance camera jobs for film, TV, commercial, and corporate clients (http://mgcine.com).

A recent shoot in Moab, UT for Eizo Displays and Phase One Camera Systems

In Seattle I spent 1.5 years on the marketing team for a product development firm called Synapse, which had some of the finest electrical, mechanical, and software engineers I've ever met. They pulled off the Nike+ FuelBand and the Disney MagicBand.

Then I spent the next 1.75 years working for a boutique production company called Orange LV, where I cut my teeth on some of the most technical cinematography of my career, including content for Microsoft's gargantuan in-store video walls, which had to be stitched together from several 6K Dragon clips. This is also where my fascination with Unity began, as we were using it to pre-vis our photo/video work for the retail environments in VR. I was also doing photogrammetry scans of different objects, which our 3D modelers worked from to create photorealistic assets.

Our first 360° camera at Joshua Tree

Upon moving to LA, I built on that fascination with @austindro. We pooled our money to buy six GoPro Hero4s and a cage to hold them all, then began experimenting with shooting and stitching, learning things like minimum action distances, where action could cross the stitch line, and how to light for 360°.

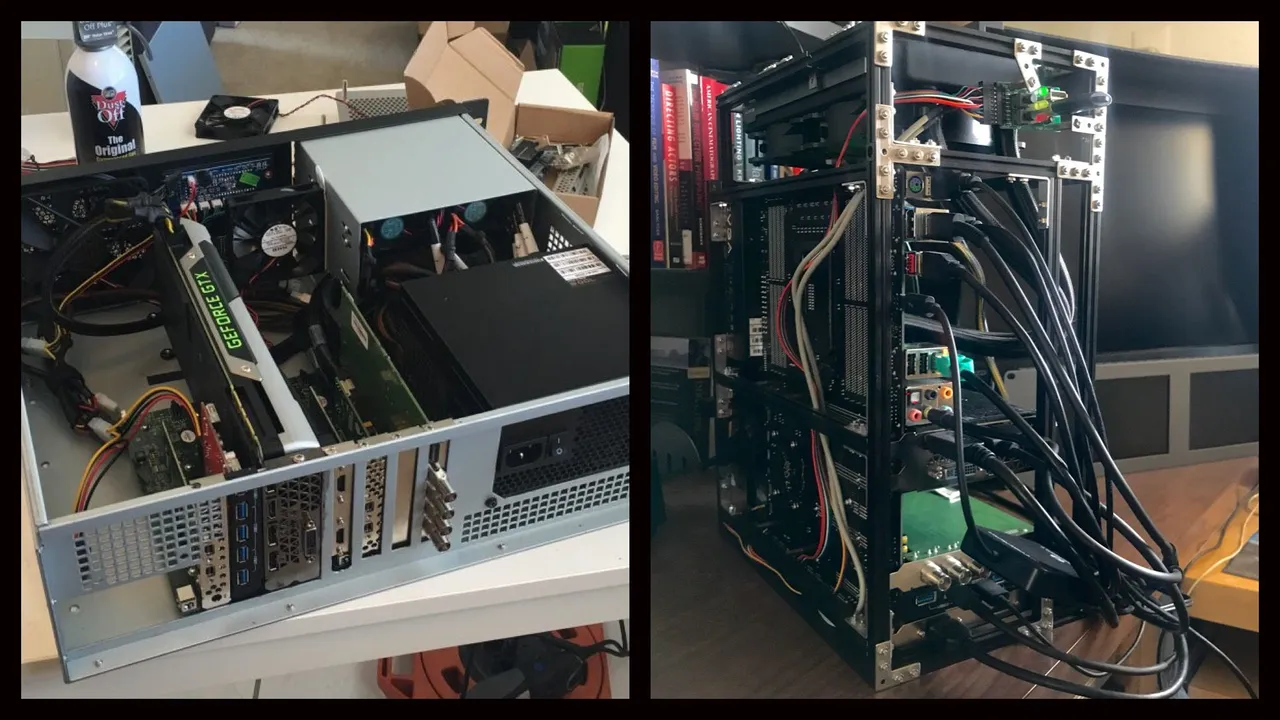

Going through this process allowed me to refine my approach, and it became obvious I would need to close the loop between editing and viewing, so I bought an Oculus Rift. At the time, though, there was only minimal Mac support left over from the DK2. I had a Mac Pro (trashcan) and tried everything I could to make it work with Windows and an eGPU, but in the end I had to sell off the Mac Pro and build a PC.

My custom eGPU [left], and custom PC [right]

Once I was up and running I noticed how boring 360° video was compared to VR video games. There was all this interaction and engagement from the games that justified putting on the headset, but I wasn't getting that same satisfaction from the video side of things. So I started a project to explore improving the experience and called it Hyperspace.

Hyperspace was built in Unity (although toward the end I began to transition it to Unreal, which is a better choice IMO) and allowed me to begin experimenting with 360° video inside a video game engine. This meant I could work on collisions and triggers, free movement and interactivity, and social integration like avatars and chat. I built a few experiences for Hyperspace, including a Joshua Tree exhibit, an architecture pre-vis experience, and finally a fine art gallery for my friend Andrei Duman that showcases his photography. The gallery was in one of the Westfield malls but had to be closed down at the end of 2017, so I'm working to reopen it in WebVR.

Duman Gallery in WebVR, coming soon to dlux.io

Exploring all these questions around VR got my shipmate/roommate/cellmate Panda (@disregardfiat) very interested as well. Together we rented out a church and renovated it to host private events so we could dig deeper into the overlap of the physical and virtual. We started a company together called OTOLUX that specializes in 360° capture and live streaming, volumetric capture, intelligent lighting, and live animation. Basically, it is the most futuristic media company we can imagine (http://otolux.la).

Things with our church didn't work out due to, ahem, permits, but together we dramatically improved on the concept of Hyperspace. We coined a new term: dlux (decentralized limitless user experiences), and retooled the tech stack by switching from Unity to A-Frame, and from WebGL to WebVR. This gave us a much easier platform to code for, which mattered since we both agreed it should be a Blockchain experience. STEEM became the obvious choice because it's already built, has a user base, is proof-of-brain, offers crypto incentive, and allows us to build our app on top. So that's what we did! Or, I should say, that's what Panda did (I helped where I could). He went on a three-week NOS-fueled code bender and whipped up a basic version built on open-source tech already available. We set up an AWS EC2 instance and created our own IPFS node to pin files to, so they are seeded for others (https://dlux.io).

I’m a big believer in Blockchain technology and confident in the vision of the future STEEM represents. I look forward to posting and engaging with the community!